CS224 Lecture1 Word2Vec


1 Introduction of Natural Language Processing

Natural language is a discrete/symbolic/categorical system.

1.1 Examples of tasks

NLP tasks span many levels of difficulty. For instance:

Easy:

- Spell checking

- Keyword search

- Finding synonyms

Synonym (noun): a word that has the same or nearly the same meaning as another word.

Medium:

- Parsing information from websites, documents, etc.

Parse (verb): to analyze the grammatical structure of a sentence.

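Hard:

- Machine translation

- Semantic analysis (what is the meaning of a query?)

- Coreference (e.g., what does “he” or “it” refer to in a given document?)

- Question answering

Coreference (noun): the relation between expressions that refer to the same entity, such as a pronoun and the word it stands for.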

1.2 How to represent words?

1.2.1 WordNet

-

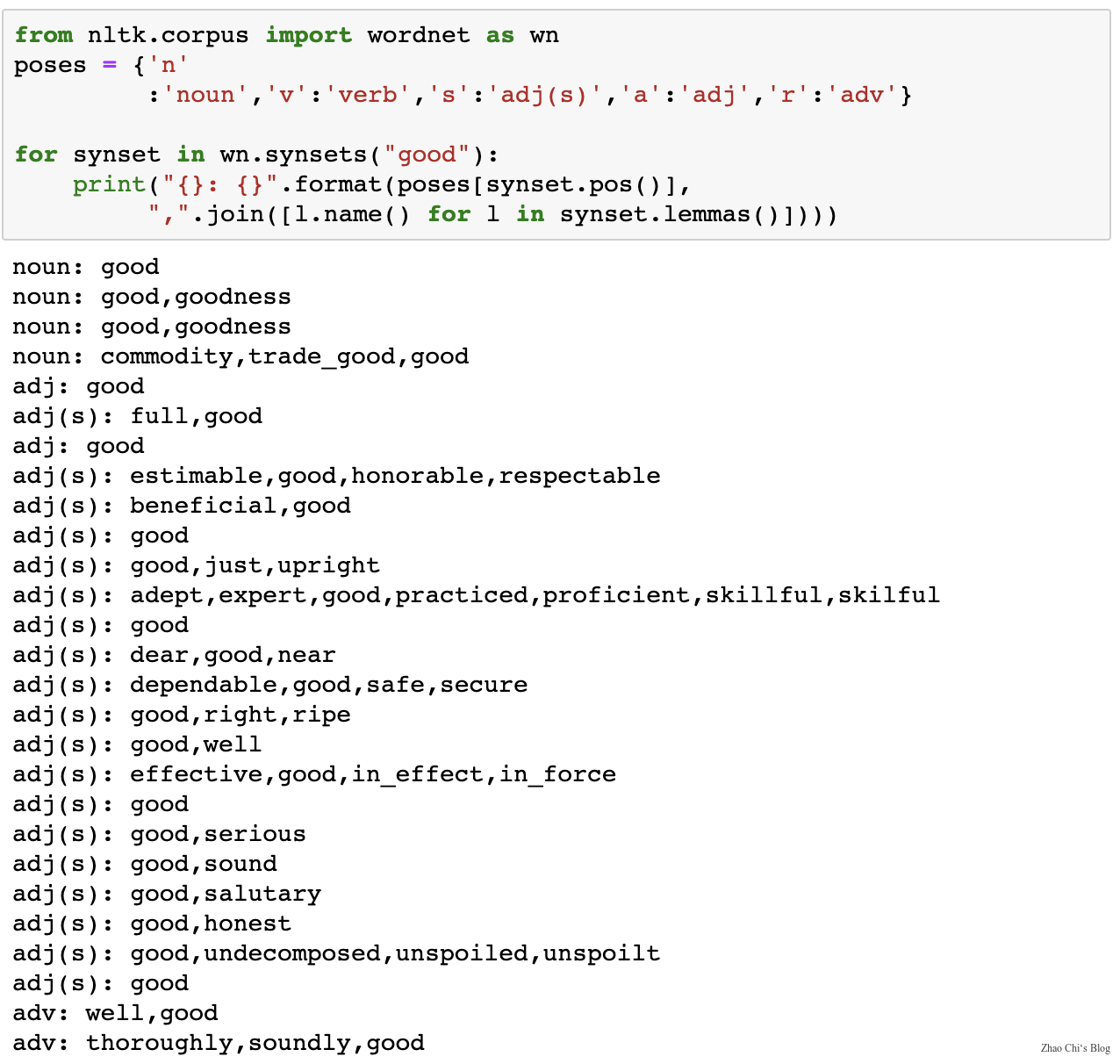

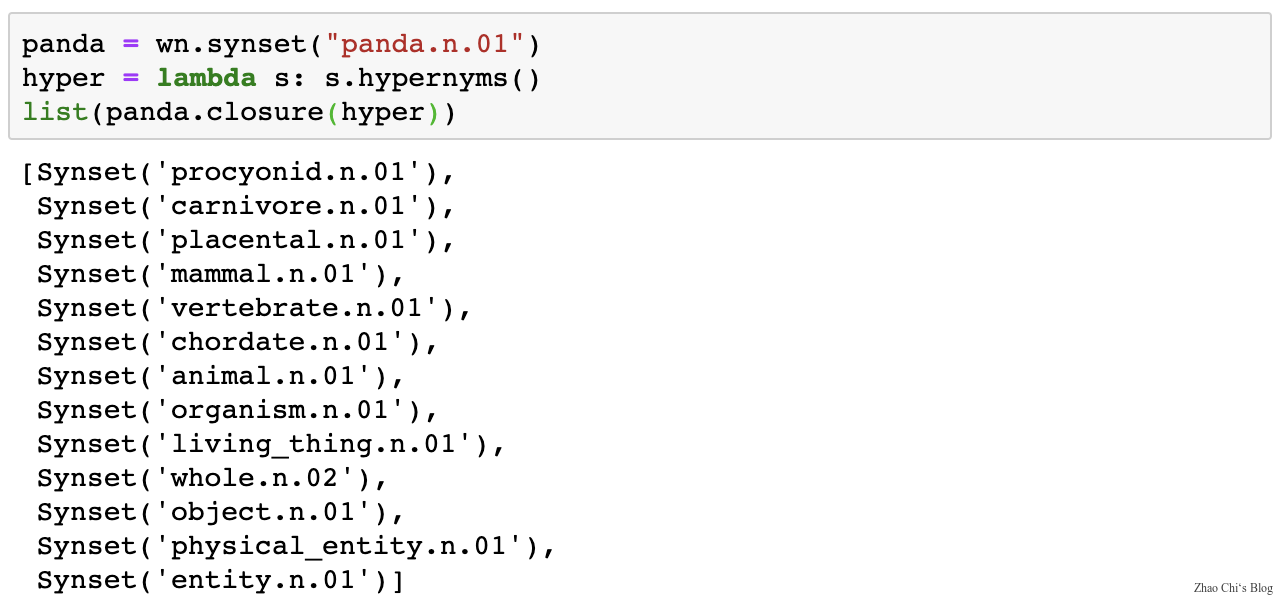

Use e.g. WordNet, a thesaurus containing lists of synonym sets and hypernyms (“is a” relationships).

hypernym: 上位词
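To make the “synonym set” and “is a” structure concrete, here is a minimal Python sketch over a tiny hand-written thesaurus. The entries and the names `SYNSETS`, `HYPERNYM`, `hypernym_chain`, and `same_synset` are hypothetical toy data and helpers, not real WordNet (NLTK exposes the real WordNet through `nltk.corpus.wordnet`). Note how thesaurus-based similarity is all-or-nothing.

```python
# Toy thesaurus in the spirit of WordNet (hypothetical entries, NOT real WordNet data).
SYNSETS = [
    {"good", "proficient", "skillful"},
    {"hotel"},
    {"motel"},
]

# Each word points to one more general word ("is a" relationship).
HYPERNYM = {
    "panda": "carnivore",
    "carnivore": "mammal",
    "mammal": "animal",
    "animal": "organism",
}


def hypernym_chain(word):
    """Follow "is a" links upward until a word has no recorded hypernym."""
    chain = []
    while word in HYPERNYM:
        word = HYPERNYM[word]
        chain.append(word)
    return chain


def same_synset(a, b):
    """Thesaurus-style similarity is binary: both words share a synonym set or they don't."""
    return any(a in synset and b in synset for synset in SYNSETS)


print(hypernym_chain("panda"))            # ['carnivore', 'mammal', 'animal', 'organism']
print(same_synset("good", "proficient"))  # True
print(same_synset("hotel", "motel"))      # False
```

Because “hotel” and “motel” sit in different toy synsets, the sketch reports no similarity at all, even though the words are close in meaning.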

Problems with resources like WordNet:

- Great as a resource but missing nuance (e.g., “proficient” is listed as a synonym for “good”, which is only correct in some contexts)

- Missing new meanings of words

- Subjective

- Requires human labor to create and adapt

- Can’t compute accurate word similarity

1.2.2 Representing words as discrete symbols

In traditional NLP, we regard words as discrete symbols (e.g., hotel, conference, motel); this is a localist representation.

Words can be represented by one-hot vectors, in which exactly one element is 1 and the rest are 0. The vector dimension equals the number of words in the vocabulary.
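A minimal Python sketch of one-hot encoding, assuming a small hypothetical vocabulary (`vocab` and `one_hot` are illustrative names): the word's index in the vocabulary decides where the single 1 goes, and the vector length equals the vocabulary size.

```python
def one_hot(word, vocab):
    """Return a list with 1 at the word's position in vocab and 0 everywhere else."""
    vec = [0] * len(vocab)      # dimension = number of words in the vocabulary
    vec[vocab.index(word)] = 1  # raises ValueError for out-of-vocabulary words
    return vec


vocab = ["conference", "hotel", "motel", "seattle"]  # hypothetical toy vocabulary
print(one_hot("motel", vocab))  # [0, 0, 1, 0]
```

With a realistic vocabulary of hundreds of thousands of words, these vectors become very long and almost entirely zero.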

Problem with words as discrete symbols:

For example, if a user searches for “Seattle motel”, we would like to match documents containing “Seattle hotel”.

But the one-hot vectors for “motel” and “hotel” are orthogonal:

$$\text{motel}^\top \, \text{hotel} = 0$$

so one-hot vectors have no natural notion of similarity.
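The orthogonality problem can be checked directly. This sketch, over a hypothetical three-word vocabulary, builds one-hot vectors and takes dot products: every pair of distinct words scores 0, whether the words are near-synonyms (“hotel”/“motel”) or not.

```python
from itertools import combinations


def one_hot(index, size):
    """One-hot vector of the given size with a 1 at position index."""
    vec = [0] * size
    vec[index] = 1
    return vec


def dot(a, b):
    """Plain dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))


vocab = ["seattle", "hotel", "motel"]  # hypothetical toy vocabulary
vectors = {w: one_hot(i, len(vocab)) for i, w in enumerate(vocab)}

for a, b in combinations(vocab, 2):
    # always 0: distinct one-hot vectors are orthogonal
    print(a, b, dot(vectors[a], vectors[b]))
```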

Solution:

- Could we rely on WordNet’s lists of synonyms to get similarity? This is well known to fail badly: the lists are incomplete, etc.

- Instead: learn to encode similarity in the vectors themselves.

1.2.3 Representing words by their context

-

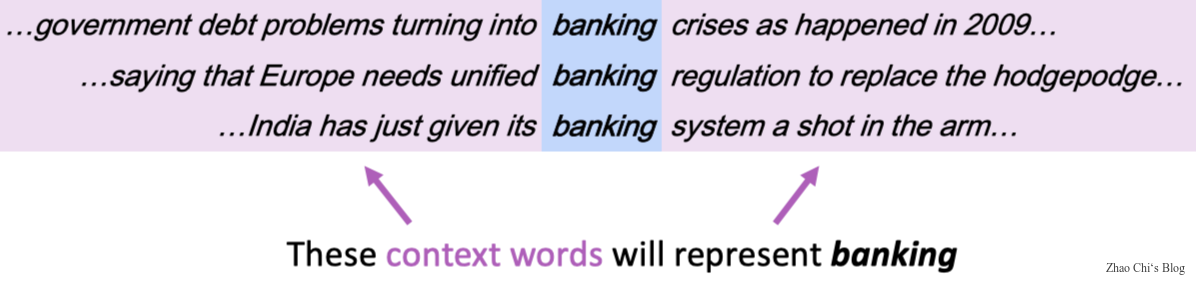

Distributional semantics: A word’s meaning is given by the words that frequently appear close-by.

- “You shall know a word by the company it keeps”(J.R. Firth 1957: 11)

- One of the most successful ideas of modern statistical NLP

-

When a word “

-

Use the many contexts of “
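A minimal sketch of what a word's context is, assuming whitespace-tokenized text and a window of `m` words on each side; `context_words` and the example sentence are hypothetical. Near the start or end of the text the window is simply cut off.

```python
def context_words(tokens, t, m):
    """Return the words within m positions of tokens[t], excluding tokens[t] itself."""
    lo = max(0, t - m)                # clip the window at the start of the text
    hi = min(len(tokens), t + m + 1)  # clip the window at the end of the text
    return [tokens[j] for j in range(lo, hi) if j != t]


tokens = "i stayed at a cheap motel in seattle".split()
print(context_words(tokens, 5, 2))  # ['a', 'cheap', 'in', 'seattle']
print(context_words(tokens, 0, 2))  # ['stayed', 'at']
```

Collecting these lists over every occurrence of a word across a large corpus gives the “many contexts” that distributional methods learn from.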

2 Word2Vec

Word vectors: word2vec uses a distributed representation of words. Each word is represented by a dense vector, i.e., a distribution of weights across the vector's elements. So instead of a one-to-one mapping between an element in the vector and a word, the representation of a word is spread across all of the elements in the vector, and each element in the vector contributes to the definition of many words.

Note: Word vectors are sometimes called word embeddings or word representations. They are a distributed representation.
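Unlike one-hot vectors, dense vectors can express graded similarity. The sketch below uses made-up three-dimensional vectors (not trained word2vec output; `VECTORS` and `cosine` are illustrative names) and cosine similarity, a common way to compare word vectors.

```python
import math

# Made-up dense vectors for illustration only; real word vectors are learned
# and typically have hundreds of dimensions.
VECTORS = {
    "hotel": [0.8, 0.3, 0.1],
    "motel": [0.7, 0.4, 0.1],
    "banana": [-0.2, 0.1, 0.9],
}


def cosine(a, b):
    """Cosine similarity: dot product divided by the product of the vector lengths."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(cosine(VECTORS["hotel"], VECTORS["motel"]))   # close to 1: similar words
print(cosine(VECTORS["hotel"], VECTORS["banana"]))  # slightly below 0: unrelated words
```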

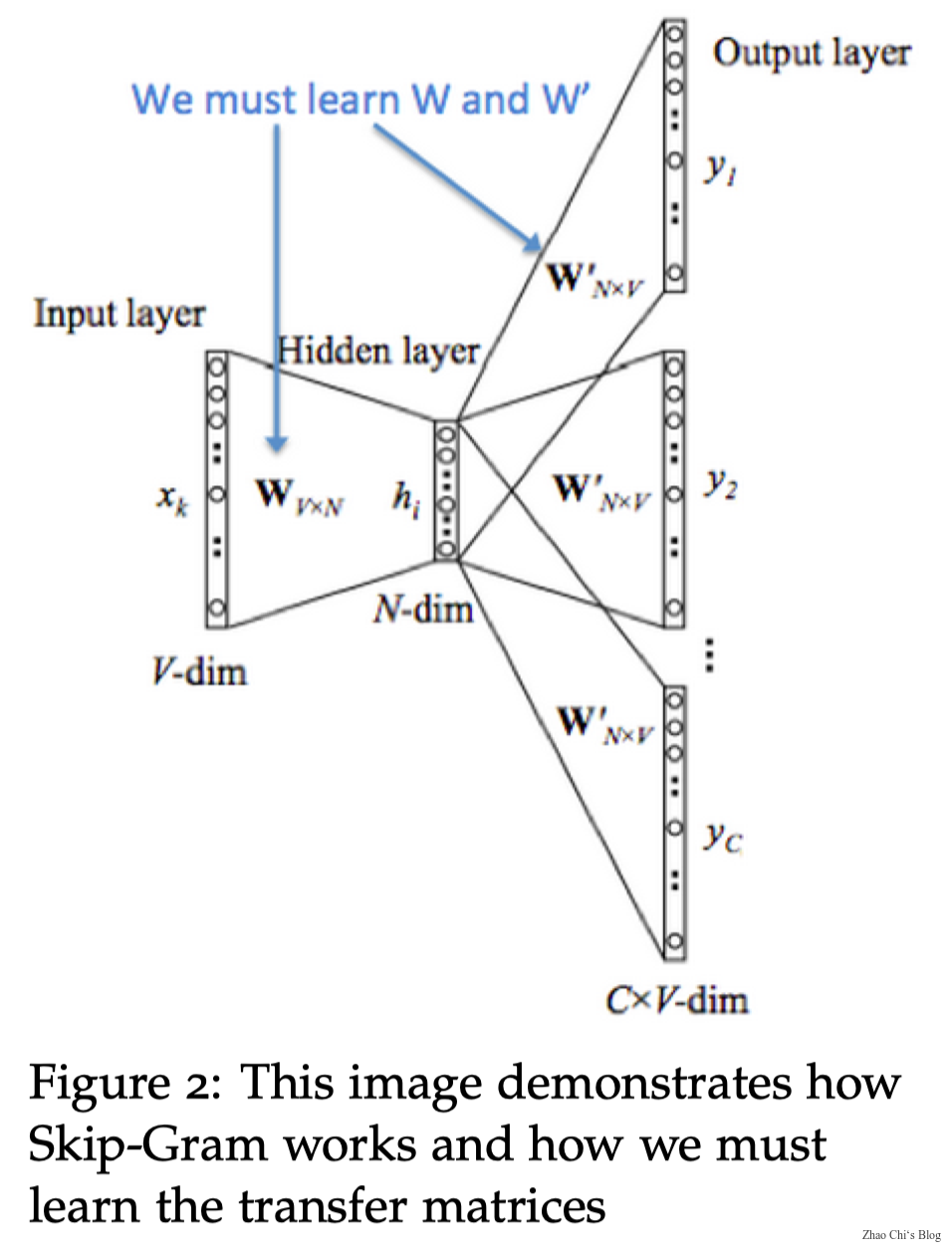

2.1 Word2Vec: Overview

Word2vec (Mikolov et al. 2013) is a framework for learning word vectors.

Idea:

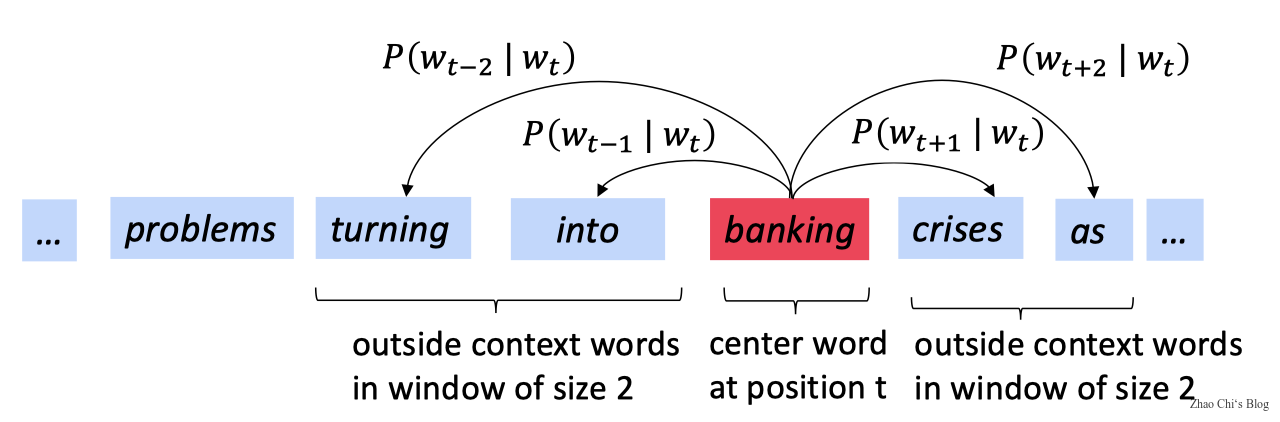

- We have a large corpus of text

- Every word in a fixed vocabulary is represented by a vector

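- Go through each position $t$ in the text, which has a center word $c$ and context (“outside”) words $o$

- Use the similarity of the word vectors for $c$ and $o$ to calculate the probability of $o$ given $c$ (or vice versa)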

- Keep adjusting the word vectors to maximize this probability
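Going through each position can be sketched as turning a token list into (center, outside) training pairs. This is a simplified, hypothetical helper assuming a window of `m` words on each side and no padding at the text boundaries.

```python
def skipgram_pairs(tokens, m):
    """List every (center, outside) pair with the outside word at most m positions away."""
    pairs = []
    for t, center in enumerate(tokens):
        for j in range(-m, m + 1):
            # skip the center word itself and offsets that fall outside the text
            if j == 0 or not 0 <= t + j < len(tokens):
                continue
            pairs.append((center, tokens[t + j]))
    return pairs


print(skipgram_pairs(["a", "b", "c"], 1))
# [('a', 'b'), ('b', 'a'), ('b', 'c'), ('c', 'b')]
```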

2.2 Word2Vec: Objective Function

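Example windows and process for computing $P(w_{t+j} \mid w_t)$:

For each position $t = 1, \dots, T$, predict the context words within a window of fixed size $m$, given the center word $w_t$. The data likelihood is

$$L(\theta) = \prod_{t=1}^{T} \prod_{\substack{-m \le j \le m \\ j \ne 0}} P(w_{t+j} \mid w_t; \theta)$$

where $\theta$ denotes all the variables to be optimized.

The objective function $J(\theta)$ is the (average) negative log likelihood:

$$J(\theta) = -\frac{1}{T} \log L(\theta) = -\frac{1}{T} \sum_{t=1}^{T} \sum_{\substack{-m \le j \le m \\ j \ne 0}} \log P(w_{t+j} \mid w_t; \theta)$$

The objective function is sometimes called the cost function or loss function.

Because the logarithm is monotonic and the minus sign turns maximization into minimization, maximizing predictive accuracy is equivalent to minimizing the objective function:

Minimizing the objective function $\Leftrightarrow$ maximizing predictive accuracy.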
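The average negative log likelihood $J(\theta) = -\frac{1}{T}\sum_{t}\sum_{j} \log P(w_{t+j} \mid w_t; \theta)$ can be evaluated directly once some model of the conditional probability is available. This sketch takes that probability as a plug-in function `prob(outside, center)` (a hypothetical interface) and, as a sanity check, uses a uniform model that gives every word probability $1/|V|$. Offsets that run past the ends of the text are skipped; that boundary handling is an assumption of the sketch.

```python
import math


def objective(tokens, m, prob):
    """J(theta) = -(1/T) * sum_t sum_{-m<=j<=m, j!=0} log P(w_{t+j} | w_t)."""
    T = len(tokens)
    total = 0.0
    for t in range(T):
        for j in range(-m, m + 1):
            if j == 0 or not 0 <= t + j < T:  # skip the center and out-of-range offsets
                continue
            total += math.log(prob(tokens[t + j], tokens[t]))
    return -total / T


tokens = ["a", "b", "c"]
vocab_size = len(set(tokens))


def uniform(outside, center):
    """Baseline model: every outside word is equally likely."""
    return 1.0 / vocab_size


print(objective(tokens, 1, uniform))  # equals (4 / 3) * log(3): 4 pairs over 3 positions
```

A model that assigns higher probability to the context words actually observed yields a lower $J$ than this uniform baseline.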

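How do we calculate $P(w_{t+j} \mid w_t; \theta)$?

To calculate it, we use two vectors per word $w$:

- $v_w$ when $w$ is a center word

- $u_w$ when $w$ is a context word

Then for a center word $c$ and a context word $o$:

$$P(o \mid c) = \frac{\exp(u_o^\top v_c)}{\sum_{w \in V} \exp(u_w^\top v_c)}$$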
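A minimal sketch of $P(o \mid c) = \exp(u_o^\top v_c) / \sum_{w \in V} \exp(u_w^\top v_c)$, assuming tiny hand-picked 2-dimensional vectors (`center_vecs` holds the $v_w$ and `outside_vecs` the $u_w$; both are illustrative, not trained). Subtracting the largest score before exponentiating does not change the result but avoids overflow.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def prob_outside_given_center(o, c, outside_vecs, center_vecs):
    """P(o | c) = exp(u_o . v_c) / sum over w in V of exp(u_w . v_c)."""
    v_c = center_vecs[c]
    scores = {w: dot(u_w, v_c) for w, u_w in outside_vecs.items()}
    top = max(scores.values())  # shift for numerical stability; cancels in the ratio
    exps = {w: math.exp(s - top) for w, s in scores.items()}
    return exps[o] / sum(exps.values())


# Hypothetical toy vectors: one "center" vector and one "outside" vector per word.
center_vecs = {"hotel": [1.0, 0.2], "motel": [0.9, 0.3], "banana": [-0.8, 0.6]}
outside_vecs = {"hotel": [1.0, 0.0], "motel": [0.9, 0.1], "banana": [-1.0, 0.5]}

for o in outside_vecs:
    print(o, prob_outside_given_center(o, "hotel", outside_vecs, center_vecs))
```

The probabilities over the whole toy vocabulary sum to 1, and “motel” (whose outside vector points the same way as $v_{\text{hotel}}$) gets far more mass than “banana”.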

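In the formula above, the dot product $u_o^\top v_c$ measures the similarity of $o$ and $c$ (a larger dot product gives a larger probability), exponentiation makes every term positive, and the denominator normalizes over the entire vocabulary $V$ to give a probability distribution.

$P(o \mid c)$ is an example of the softmax function $\mathbb{R}^n \to (0, 1)^n$:

$$\operatorname{softmax}(x)_i = \frac{\exp(x_i)}{\sum_{j=1}^{n} \exp(x_j)} = p_i$$

The softmax function applies the standard exponential function to each element $x_i$ of the input vector $x$ and normalizes the results by dividing by the sum of all these exponentials, so the components of the output vector $p$ sum to 1.

The softmax function above maps arbitrary values $x_i$ to a probability distribution $p_i$.

- Frequently used in deep learning.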
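A standalone sketch of softmax in plain Python. It subtracts $\max_j x_j$ from every input first; this leaves the output unchanged (the factor $\exp(-\max_j x_j)$ cancels between numerator and denominator) but keeps `math.exp` from overflowing on large inputs.

```python
import math


def softmax(xs):
    """Map a list of arbitrary reals x_i to probabilities p_i = exp(x_i) / sum_j exp(x_j)."""
    top = max(xs)
    exps = [math.exp(x - top) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


p = softmax([1.0, 2.0, 3.0])
print(p)  # approximately [0.090, 0.245, 0.665]

# Shifting every input by the same constant gives the same distribution;
# without the max-subtraction, math.exp(1003.0) would raise OverflowError.
print(softmax([1001.0, 1002.0, 1003.0]))
```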

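Useful basics about derivatives:

$$\frac{\partial \, x^\top a}{\partial x} = \frac{\partial \, a^\top x}{\partial x} = a$$

Writing the expression out with indices proves it: $x^\top a = \sum_i x_i a_i$, so $\frac{\partial}{\partial x_k} \sum_i x_i a_i = a_k$.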
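The identity $\partial (x^\top a) / \partial x = a$ can also be checked numerically. This sketch compares a central finite-difference estimate of the gradient of $f(x) = x^\top a$ against $a$; `numerical_gradient`, the example vectors, and the step size `eps` are illustrative choices.

```python
def dot(x, a):
    return sum(xi * ai for xi, ai in zip(x, a))


def numerical_gradient(f, x, eps=1e-6):
    """Central-difference estimate of the gradient of f at x."""
    grad = []
    for i in range(len(x)):
        plus = list(x)
        minus = list(x)
        plus[i] += eps
        minus[i] -= eps
        grad.append((f(plus) - f(minus)) / (2 * eps))
    return grad


a = [2.0, -1.0, 0.5]
x = [0.3, 0.7, -1.2]
print(numerical_gradient(lambda v: dot(v, a), x))  # approximately [2.0, -1.0, 0.5], i.e. a
```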

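We need to minimize

$$J(\theta) = -\frac{1}{T} \sum_{t=1}^{T} \sum_{\substack{-m \le j \le m \\ j \ne 0}} \log P(w_{t+j} \mid w_t; \theta)$$

so, for each center word $c$ and context word $o$, we minimize

$$-\log P(o \mid c) = -\log \frac{\exp(u_o^\top v_c)}{\sum_{w \in V} \exp(u_w^\top v_c)}$$

Take the partial derivative of $\log P(o \mid c)$ with respect to the center vector $v_c$:

$$
\begin{aligned}
\frac{\partial}{\partial v_c} \log P(o \mid c)
&= \frac{\partial}{\partial v_c} u_o^\top v_c - \frac{\partial}{\partial v_c} \log \sum_{w \in V} \exp(u_w^\top v_c) \\
&= u_o - \frac{\sum_{x \in V} \exp(u_x^\top v_c) \, u_x}{\sum_{w \in V} \exp(u_w^\top v_c)} \\
&= u_o - \sum_{x \in V} P(x \mid c) \, u_x
\end{aligned}
$$

That is, the gradient is the observed context vector $u_o$ minus the expected context vector under the model; the gradient of $-\log P(o \mid c)$ is its negative.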
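To double-check the gradient $\frac{\partial}{\partial v_c} \log P(o \mid c) = u_o - \sum_{x \in V} P(x \mid c) \, u_x$, this sketch compares it with a finite-difference estimate on hypothetical 2-dimensional vectors (`U` maps each word to its outside vector $u_w$ and `v_c` is the center vector; both are made up).

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def probs(v_c, U):
    """P(x | c) for every word x, via a numerically stable softmax over u_x . v_c."""
    scores = {w: dot(u, v_c) for w, u in U.items()}
    top = max(scores.values())
    exps = {w: math.exp(s - top) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def log_prob(o, v_c, U):
    return math.log(probs(v_c, U)[o])


def grad_log_prob(o, v_c, U):
    """Analytic gradient w.r.t. v_c: u_o minus the expected outside vector under P(x | c)."""
    p = probs(v_c, U)
    expected = [sum(p[w] * U[w][k] for w in U) for k in range(len(v_c))]
    return [U[o][k] - expected[k] for k in range(len(v_c))]


def numerical_grad(o, v_c, U, eps=1e-6):
    """Central-difference estimate of the same gradient."""
    grad = []
    for k in range(len(v_c)):
        plus, minus = list(v_c), list(v_c)
        plus[k] += eps
        minus[k] -= eps
        grad.append((log_prob(o, plus, U) - log_prob(o, minus, U)) / (2 * eps))
    return grad


U = {"hotel": [1.0, 0.0], "motel": [0.9, 0.1], "banana": [-1.0, 0.5]}
v_c = [1.0, 0.2]
print(grad_log_prob("motel", v_c, U))
print(numerical_grad("motel", v_c, U))  # should agree to about six decimal places
```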

The derivation above illustrates the skip-gram model and how to update the center word vectors $v_c$ by gradient descent.
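Putting the pieces together, one gradient step for a single (center, outside) pair moves $v_c$ against the gradient of $-\log P(o \mid c)$, i.e. $v_c \leftarrow v_c + \alpha \left(u_o - \sum_{x \in V} P(x \mid c) \, u_x\right)$. This is a minimal sketch under assumptions: the learning rate `alpha` and the toy vectors are made up, and only $v_c$ is updated (the outside vectors $u_w$ would need their own gradient, which is not derived in this section).

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def probs(v_c, U):
    """P(x | c) for every word x (softmax over u_x . v_c)."""
    scores = {w: dot(u, v_c) for w, u in U.items()}
    top = max(scores.values())
    exps = {w: math.exp(s - top) for w, s in scores.items()}
    total = sum(exps.values())
    return {w: e / total for w, e in exps.items()}


def sgd_step_center(o, v_c, U, alpha=0.1):
    """Return v_c after one gradient-descent step on -log P(o | c)."""
    p = probs(v_c, U)
    grad_log_p = [U[o][k] - sum(p[w] * U[w][k] for w in U) for k in range(len(v_c))]
    # Descending on -log P is the same as ascending on log P.
    return [v_c[k] + alpha * grad_log_p[k] for k in range(len(v_c))]


U = {"hotel": [1.0, 0.0], "motel": [0.9, 0.1], "banana": [-1.0, 0.5]}
v_c = [1.0, 0.2]
before = probs(v_c, U)["motel"]
v_c = sgd_step_center("motel", v_c, U)
after = probs(v_c, U)["motel"]
print(before, after)  # P(motel | c) increases after the step
```

Repeating such steps over all (center, outside) pairs in the corpus is what "keep adjusting the word vectors to maximize this probability" amounts to.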